Building a Homelab Agentic Ecosystem (Part One)

What if you could query your infrastructure like a chatbot? In Part One of this series, I build a local-first, LLM-powered agent that understands natural language and talks directly to Prometheus, Kubernetes, and Harbor... no cloud, no keys, just raw insight.

It's been a while since my last article. I've been rebuilding my homelab so I can develop some more interesting tools and AI-related things...

My homelab's grown into a beautiful mess of metrics, pods, and dashboards. Grafana shows me everything... except when I need it most, and I forget what the metric name was. So today, I started building an agent that answers plain-English questions like "what was the highest GPU temp last week?" or "what repositories are present under the project ailabs?" in my Docker registry.

The twist? It runs locally. On my hardware. Using open models.

This post is Part 1 of the journey... setting up a natural language interface to query my infrastructure. Part 2 will bring memory, planning, and more agentic capabilities into the mix.

So, with all this in mind, I took a look at what I have running that can feed into this ecosystem:

- Kubernetes cluster - all my apps, services and AI models run in Kubernetes

- Harbor Registry - I pull a lot of commonly used images into a local registry so it's quicker to spin up larger services like vLLM model runtimes

- Prometheus - ALL the data goes into Prometheus and Loki

- Models running on 2 small GPU nodes:

  - Ollama-me-this - an Ollama runtime with a number of available models

  - Mistral vLLM - a vLLM runtime with a Mistral 7B OpenAI-compatible model

This immediately gives me 5 potential services that I can hook into and leverage moving forward via a single natural language interface, without having to remember that the temperature metric for the GPU is DCGM_FI_DEV_GPU_TEMP. I do also have certain dashboards in Grafana to surface all of these, but building a single dashboard to expose everything would result in 1 epic dashboard and probably take about an hour to load 🤣

The design (part one - the foundations)

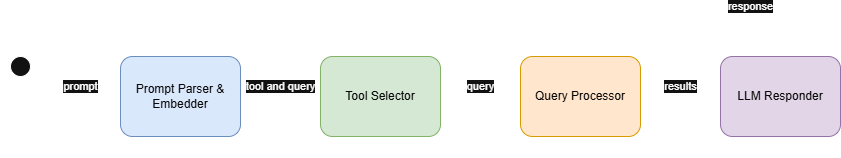

To begin with, I set out to build a simple AI toolset and response mechanism that looks as follows:

The basic design is:

- Capture a user question

- Pass the question into a tool selector

- Based on the selected tool, route it to the correct agent

- Take the response from the agent and feed that into an LLM

- Send the response back to the user
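The fourth step, feeding the tool's raw result into an LLM, mostly comes down to building a prompt that pairs the question with the data. Here is a minimal sketch of that idea; the function name build_answer_prompt, the prompt wording and the truncation limit are all assumptions for illustration, not the code from the repository.

```python
import json

# Assumption: a rough cap so large tool results don't overflow the model's context
MAX_DATA_CHARS = 4000


def build_answer_prompt(question: str, data: object) -> str:
    """Combine the user's question and the raw tool result into one prompt."""
    # default=str stops json.dumps failing on values such as datetimes
    serialized = json.dumps(data, indent=2, default=str)
    if len(serialized) > MAX_DATA_CHARS:
        serialized = serialized[:MAX_DATA_CHARS] + "\n... (truncated)"
    return (
        "You are a helpful infrastructure assistant.\n"
        "Answer the question using only the monitoring data below.\n\n"
        f"Question: {question}\n\n"
        f"Data:\n{serialized}\n"
    )
```

The resulting string can be sent to whichever model produces the final answer; truncating the data is a blunt instrument, but it keeps a 38-pod listing from swamping a small local model.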

In terms of structure I went with something like:

- llm - folder that contains the llm integration clients

- tools - folder that contains the agents

- tool_router.py - main file for deciding which tool to use

- main.py - main application entry point which pulls it all together

The build

Now that we have an idea of why we want to build something and how we might go about it, let's dive into what I actually built...

📂 Code Repository: Explore the complete code and configurations for this article series on GitHub.

The first challenge was to find a model that would produce the queries based on user inputs. To overcome this, I leveraged Ollama with a number of models running inside:

- openchat

- phi4-mini

- qwen3:8b

- mistral:7b

- llama3.1:8b

- deepseek-coder:6.7b

- dolphin-mixtral

NB: Ollama lets me quickly switch between any of the models available within the environment at runtime, which is especially handy for rapid testing in local environments.
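Because Ollama picks the model per request, comparing candidates is just a loop over model names. The sketch below shows one way to do that against Ollama's /api/generate endpoint; the URL assumes Ollama's default port on localhost, and the helper names (build_generate_payload, post_json, compare_models) are made up for this example.

```python
from typing import Callable

# Assumption: Ollama listening on its default port; adjust for your cluster
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_payload(model: str, prompt: str) -> dict:
    """Request body for a non-streaming call to /api/generate."""
    return {"model": model, "prompt": prompt, "stream": False}


def post_json(url: str, payload: dict) -> dict:
    """Send the payload with requests (imported lazily so the rest stays dependency-free)."""
    import requests

    response = requests.post(url, json=payload, timeout=120)
    response.raise_for_status()
    return response.json()


def compare_models(
    models: list[str],
    prompt: str,
    send: Callable[[str, dict], dict] = post_json,
) -> dict[str, str]:
    """Run the same prompt against each model and collect the generated text."""
    results = {}
    for model in models:
        reply = send(OLLAMA_URL, build_generate_payload(model, prompt))
        # Non-streaming replies carry the generated text in the "response" field
        results[model] = reply.get("response", "")
    return results
```

Calling compare_models(["phi4-mini", "mistral:7b"], prompt) returns one answer per model, which makes it easy to eyeball which one sticks to the requested format most consistently.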

For each of these models I ran a simple prompt to get a query back that I could then feed into Prometheus and kubectl. All of the models worked pretty well, but phi4-mini stood out as the most consistent, so I used it for the tool selector as follows:

    def decide_tool(question: str) -> tuple[str, dict]:
        routing_prompt = f"""
    You are an infrastructure automation agent.

    Given a user question, decide which tool to use and return the tool call in the following exact JSON format:

    ```
    {{
      "tool": "<tool_name>",
      "args": "...",
      "filter": {{...}} # optional, can be omitted if not needed
    }}
    ```

    Only return the JSON. No explanation, no preamble.

    Available tools:

    1. query_prometheus
       - args: a valid PromQL query string
       - Example:
         {{
           "tool": "query_prometheus",
           "args": "avg(rate(node_cpu_seconds_total[5m]))"
         }}

    2. query_kubernetes
       - Use this tool to get information about Kubernetes resources like pods, services, and ingresses.
       - **DO NOT** generate a kubectl command string.
       - Instead, provide a JSON object with the 'kind', 'namespace', and 'filter' keys.
       - Supported 'kind' values are: "pods", "services", "ingresses".
       - 'namespace' is optional.
       - 'filter' is optional and can be used for more specific queries.
       - Example 1 (all pods in a namespace):
         {{
           "tool": "query_kubernetes",
           "args": {{
             "kind": "pods",
             "namespace": "kube-system"
           }}
         }}
       - Example 2 (all pods on a specific node):
         {{
           "tool": "query_kubernetes",
           "args": {{
             "kind": "pods",
             "filter": {{
               "node": "k8s-cpu-worker02"
             }}
           }}
         }}

    3. query_harbor_registry
       - Use this tool to get information about container images from the Harbor registry.
       - Provide a JSON object with the 'operation' and other required arguments.
       - Supported 'operation' values are: "list_repositories", "list_tags".
       - Example 1 (list all image repositories in the 'library' project):
         {{
           "tool": "query_harbor_registry",
           "args": {{
             "operation": "list_repositories",
             "project_name": "library"
           }}
         }}
       - Example 2 (list all tags for the 'nginx' image in the 'proxies' project):
         {{
           "tool": "query_harbor_registry",
           "args": {{
             "operation": "list_tags",
             "project_name": "proxies",
             "repository_name": "nginx"
           }}
         }}

    ---

    Question: {question}
    """
        response = ask_ollama_tool_call(routing_prompt)
        return parse_tool_call(response)

This is basically a large system prompt that is fed into an Ollama runtime with the phi4-mini model running within it. For the question "What was the average CPU usage across all nodes over the last 5 minutes?", what I get back is a JSON object that looks as follows:

    {
        'tool': 'query_prometheus',
        'args': 'avg(rate(node_cpu_seconds_total[5m])) by (instance)'
    }

I tested this with a number of different queries for the different tools to make sure it continued to produce the expected results, then moved on to building the tools themselves.
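The parse_tool_call helper isn't shown here, and small models don't always obey "only return the JSON". As a rough illustration of what defensive parsing could look like, here is a sketch under my own assumptions: the name parse_tool_call_sketch and the ("none", {}) fallback are hypothetical, not the repository's implementation.

```python
import json


def parse_tool_call_sketch(response: str) -> tuple:
    """Extract (tool, args) from raw model output, tolerating surrounding chatter."""
    # Models sometimes wrap the JSON in code fences or add a sentence around it,
    # so keep only the span from the first "{" to the last "}"
    start = response.find("{")
    end = response.rfind("}")
    if start == -1 or end <= start:
        return "none", {}
    try:
        payload = json.loads(response[start:end + 1])
    except json.JSONDecodeError:
        return "none", {}
    if not isinstance(payload, dict):
        return "none", {}
    # args may be a string (PromQL) or an object (Kubernetes, Harbor)
    return payload.get("tool", "none"), payload.get("args", {})
```

Returning a "none" tool rather than raising keeps the API responsive when the model produces something unparseable, at the cost of hiding the raw output, so logging the response before parsing is probably worthwhile.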

The tools

So we have 3 tools to build:

- tools/kubernetes_client.py - leverages the Python kubernetes module to run the query and return the results

- tools/prometheus_client.py - leverages the Prometheus API endpoint to execute the query and return the results

- tools/registry_client.py - leverages the Harbor Docker registry API to execute the query and return the results
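For the registry client, the two operations from the routing prompt map fairly directly onto Harbor's v2.0 REST API. The sketch below only builds the request URL and query parameters; the base URL is a placeholder, the function name build_harbor_request is invented for this example, and authentication is left out entirely.

```python
from typing import Optional
from urllib.parse import quote

# Assumption: placeholder address, replace with your own Harbor instance
HARBOR_URL = "https://harbor.example.local"


def build_harbor_request(
    operation: str,
    project_name: str,
    repository_name: Optional[str] = None,
) -> tuple:
    """Map a tool call onto a Harbor v2.0 API URL and its query parameters."""
    base = f"{HARBOR_URL}/api/v2.0/projects/{quote(project_name, safe='')}"
    if operation == "list_repositories":
        return f"{base}/repositories", {"page_size": 100}
    if operation == "list_tags":
        if not repository_name:
            raise ValueError("list_tags requires 'repository_name'")
        # Harbor expects slashes in repository names to be URL-encoded twice
        repo = quote(quote(repository_name, safe=""), safe="")
        # Tags are returned as part of each artifact in the repository
        return f"{base}/repositories/{repo}/artifacts", {"with_tag": "true", "page_size": 100}
    raise ValueError(f"Unsupported operation: {operation!r}")
```

The returned pair can be passed straight to requests.get(url, params=params, ...) along with whatever credentials the registry needs.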

The prometheus_client.py script looks as follows:

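    import requests

    # Assumption: placeholder address, point this at your own Prometheus instance
    PROM_URL = "http://localhost:9090"


    def run_promql_query(query):
        """Run an instant PromQL query and return the result list or an error dict."""
        try:
            response = requests.get(
                f"{PROM_URL}/api/v1/query",
                params={"query": query},
                timeout=10,  # avoid hanging the API if Prometheus is unreachable
            )
            response.raise_for_status()
            data = response.json()
            if data['status'] == 'success':
                return data['data']['result']
            else:
                return {"error": data.get("error", "Unknown error")}
        except requests.exceptions.HTTPError:
            # response is always set here because raise_for_status() raised
            return {"error": f"HTTP Error {response.status_code}: {response.text}"}
        except Exception as e:
            return {"error": str(e)}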

This function basically builds up a Prometheus API request and handles a couple of exceptions based on the response it gets back.
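On success, an instant query returns a list of samples, each with a metric label set and a [timestamp, "value"] pair where the value is a string. A small helper can flatten that into something easier for the LLM (and me) to read. This is a sketch only; the name summarise_vector and the "<all>" fallback label are my own choices for illustration.

```python
def summarise_vector(result: list, label: str = "instance") -> dict:
    """Flatten an instant-vector result into {label value: float sample}."""
    summary = {}
    for sample in result:
        # Aggregations without a "by" clause return an empty label set
        name = sample.get("metric", {}).get(label, "<all>")
        _timestamp, raw_value = sample["value"]
        # Prometheus encodes sample values as strings in its JSON responses
        summary[name] = float(raw_value)
    return summary
```

For the GPU question from the introduction, a query along the lines of max(max_over_time(DCGM_FI_DEV_GPU_TEMP[1w])) would come back as a single sample with no labels, which this helper would report under "<all>".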

An early challenge was realising my tool-use prompt was generating kubectl command strings, while my Python code was built to use the official Kubernetes client library, which expects structured data like kind='pods'. This highlighted a key principle of building agents: the 'prompt engineering' that defines the AI's output must be perfectly synchronised with the 'software engineering' of the tool it's trying to use. I went through many prompt iterations before getting to the point where it would work with the kubernetes library 🤔
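To make that synchronisation concrete, here is a sketch of how the structured arguments from the prompt could be translated into calls on the official kubernetes Python client. It only works out which client method to call and with which keyword arguments, so it runs without a cluster; build_k8s_call is a hypothetical helper, and the parameter is named filter purely to match the JSON key in the prompt.

```python
from typing import Optional

# (API class, namespaced method, cluster-wide method) in the official Python client
KIND_TO_CALL = {
    "pods": ("CoreV1Api", "list_namespaced_pod", "list_pod_for_all_namespaces"),
    "services": ("CoreV1Api", "list_namespaced_service", "list_service_for_all_namespaces"),
    "ingresses": ("NetworkingV1Api", "list_namespaced_ingress", "list_ingress_for_all_namespaces"),
}


def build_k8s_call(
    kind: str,
    namespace: Optional[str] = None,
    filter: Optional[dict] = None,  # shadows the builtin on purpose, to accept **args
) -> tuple:
    """Translate the prompt's structured args into (api_class, method, kwargs)."""
    if kind not in KIND_TO_CALL:
        # A kubectl string such as "kubectl get pods" ends up here
        raise ValueError(f"Unsupported kind: {kind!r}")
    api_class, namespaced, cluster_wide = KIND_TO_CALL[kind]
    kwargs = {}
    if namespace:
        kwargs["namespace"] = namespace
    node = (filter or {}).get("node")
    if node:
        if kind != "pods":
            raise ValueError("The 'node' filter only applies to pods")
        kwargs["field_selector"] = f"spec.nodeName={node}"
    return api_class, (namespaced if namespace else cluster_wide), kwargs
```

With the client installed and a kubeconfig loaded, the tuple can be executed as getattr(getattr(client, api_class)(), method)(**kwargs). Rejecting unknown kinds up front is what surfaces a prompt that has drifted back to emitting kubectl strings.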

Pulling it all together

To leverage all of the tools and get a formatted response back I built a simple FastAPI app that looks as follows:

    from fastapi import FastAPI
    from pydantic import BaseModel

    # decide_tool, run_promql_query, run_kubernetes_query, query_harbor_registry
    # and ask_llm come from the project's tool_router, tools and llm modules

    app = FastAPI()


    class Query(BaseModel):
        question: str


    @app.post("/ask")
    async def ask_agent(query: Query):
        question = query.question

        # choose the right tool
        tool_name, args = decide_tool(question)

        # pass the args into the right tool and set the result
        if tool_name == "query_prometheus" and args:
            # the routing prompt returns the PromQL as a plain string; also accept
            # a dict with a "command" key in case parse_tool_call wraps it
            promql = args.get("command", "") if isinstance(args, dict) else args
            result = run_promql_query(promql)
        elif tool_name == "query_kubernetes" and args:
            if "kind" in args:
                result = run_kubernetes_query(**args)
            else:
                result = {"error": "LLM failed to provide a 'kind' for the Kubernetes query."}
        elif tool_name == "query_harbor_registry":
            if "operation" in args:
                result = query_harbor_registry(**args)
            else:
                result = {"error": "LLM failed to provide an 'operation' for the Harbor query."}
        else:
            result = {"tool": "none", "result": "Tool not recognized or no tool needed"}

        # pass the result into the llm for a formatted response to the user
        return ask_llm(
            question=question,
            data=result
        )

Running this with uvicorn main:app --reload --port 8001 allows us to call the endpoint with something like:

    curl -X POST http://localhost:8001/ask \
        -H "Content-Type: application/json" \
        -d '{"question": "What was the average CPU usage across all nodes last 5 minutes?"}'

There's probably a much neater way of doing this, but for now it works as the foundation... some samples are as follows:

Registry query:

    $ curl -X POST http://localhost:8001/ask \
        -H "Content-Type: application/json" \
        -d '{"question": "What repositories are available in the ailabs project?"}'

    "The available repositories in the ailabs project are 'ollama', 'vllm-openai', and 'headline-categoriser'."

Kubernetes query:

    $ curl -X POST http://localhost:8001/ask \
        -H "Content-Type: application/json" \
        -d '{"question": "How many pods are running in the namespace kubeflow?"}'

    " Based on the provided monitoring data, there are 38 pods running in the namespace kubeflow. These include pods such as 'admission-webhook-deployment-67fd864794-n9njk', 'katib-controller-5674c8b4d6-78l8b', 'kubeflow-pipelines-profile-controller-699dc67f96-477wm', 'ml-pipeline-65ff55599d-mxppx', 'mysql-6868b5b465-6qnng', and many others."

Prometheus query:

    $ curl -X POST http://localhost:8001/ask \
        -H "Content-Type: application/json" \
        -d '{"question": "What was the average CPU usage across all nodes last 5 minutes?"}'

    "The average CPU usage over the past 5 minutes across all nodes is approximately 0.12381 (or 12.38%)."

One caveat on that last answer: the generated query averages over every CPU mode, idle included, so on a typical Linux node_exporter with eight modes it lands near 0.125 regardless of load. A query that excludes idle time, such as 1 - avg(rate(node_cpu_seconds_total{mode="idle"}[5m])), gives a truer utilisation figure, and that is something to tighten up in the routing prompt's example.

What we have here is a simple but pretty solid set of tools that can take a natural language question, determine the right tool and get a response back as a reply to the user's question.

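On the "much neater way" point: one common alternative to the if/elif chain in the /ask handler is a dispatch table that maps tool names to handler functions. The following is a sketch of that pattern with stub handlers so it runs on its own; make_dispatcher and the stubs are illustrative names, not part of the project.

```python
from typing import Any, Callable

Handler = Callable[[Any], Any]


def make_dispatcher(handlers: dict) -> Callable[[str, Any], Any]:
    """Return a function that routes (tool_name, args) to the matching handler."""

    def dispatch(tool_name: str, args: Any) -> Any:
        handler: Handler = handlers.get(tool_name)
        if handler is None:
            return {"tool": "none", "result": "Tool not recognized or no tool needed"}
        try:
            return handler(args)
        except (TypeError, KeyError) as exc:
            # Typically the model omitted or misnamed an argument
            return {"error": f"Bad arguments for {tool_name}: {exc}"}

    return dispatch


# Stub handlers stand in for the real clients so the sketch is self-contained
dispatch = make_dispatcher({
    "query_prometheus": lambda args: {"promql": args},
    "query_kubernetes": lambda args: {"kind": args["kind"]},
})
```

In the real app the table entries would wrap the existing functions, for example lambda args: run_kubernetes_query(**args), and adding a new tool becomes a one-line change rather than another elif branch.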
Next steps

What we have now is a powerful, tool-based application that can understand a user's intent and route it to a single, correct tool. But to move towards a true agentic platform, the system needs to think for itself...

To achieve this, the next part of this project will focus on:

True Agentic Behavior (Planning & Reasoning) - The next step is to give the LLM more control. Instead of just picking one tool, the agent will need to create a plan to answer more complex questions. The goal: the agent should be able to reason about a problem, break it down into steps, and decide which tools to use in what order.

Multi-step Tool Use (Nested Queries) - A query like "Find the pod with the highest CPU usage in the kubeflow namespace and then show me its logs" is impossible with the current system. A true agent would need to first call query_prometheus to find the pod name, and then use the output of that call as the input for a second call to query_kubernetes to fetch the logs.

Expanding the Toolkit (Giving the Agent More Power) - to fulfill these complex plans, the agent will need new, more powerful tools... including those with write-access to the cluster. The toolkit will be expanded with capabilities like:

- a tool to execute kubectl apply to deploy a new model

- a tool to interact with a cloud provider's API to provision a new worker node

- a tool to trigger a Kubeflow pipeline

- a tool to trigger Terraform and an Ansible playbook to autoscale the cluster when demand requires it

- a tool that can spin up a new model in the cluster and potentially take a different one down if neither GPU is available

- a tool to deploy a new InferenceService for a BERT model

- a tool to trigger a Kubeflow experiment based on an existing pipeline

I might be a bit ambitious here but it's good to have a goal 😆
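As a taste of the multi-step idea, here is a rough sketch of the "highest CPU pod, then its logs" chain: take the Prometheus result from step one and turn it into the tool call for step two. Everything here is hypothetical, including the function names, the assumption that samples carry a "pod" label, and the "logs" kind, which the current query_kubernetes tool does not support.

```python
def pod_with_highest_value(result: list) -> str:
    """Pick the pod label from the sample with the largest value."""
    if not result:
        raise ValueError("Prometheus returned no samples")
    # Sample values arrive as strings, so convert before comparing
    top = max(result, key=lambda sample: float(sample["value"][1]))
    return top["metric"]["pod"]


def plan_log_lookup(prom_result: list, namespace: str) -> dict:
    """Build the second tool call from the output of the first."""
    return {
        "tool": "query_kubernetes",
        "args": {
            "kind": "logs",  # hypothetical: not one of the kinds supported today
            "namespace": namespace,
            "filter": {"pod": pod_with_highest_value(prom_result)},
        },
    }
```

In Part Two the interesting bit is getting the model to come up with this chaining itself rather than having it hard-coded like this.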