Google I/O '25: Mind-Blowing Fusion of AI and Creativity

Google I/O ’25 revealed Gemini’s mind-blowing AI power… from Flow’s end-to-end video creation to XR-assisted infrastructure management. Yet as AI takes on creative and technical tasks, which human skills risk fading? A forward-thinking look at promise… and potential peril.

Wow. Just... wow. If you caught the Google I/O '25 keynote, you know that Google is pushing the boundaries of what AI can do, particularly when it comes to blending powerful models and tools to unlock incredible new levels of content creation and interaction. The underlying message throughout felt clear: Gemini isn't just getting smarter; it's becoming the central intelligence that helps disparate tools work together in ways that feel nothing short of revolutionary.

The keynote demoed capabilities rolling out in the near future (days, weeks, months) that genuinely make you lean back and rethink what's possible. It wasn't just about better answers; it was about turning ideas into reality, making complex tasks simple, and interacting with technology in profoundly natural ways.

If you want to watch it before I spoil it, go and check out the keynote on YouTube (jump to about 1 hr 7 mins for the actual start).

The Core Engine: Gemini's Relentless Progress

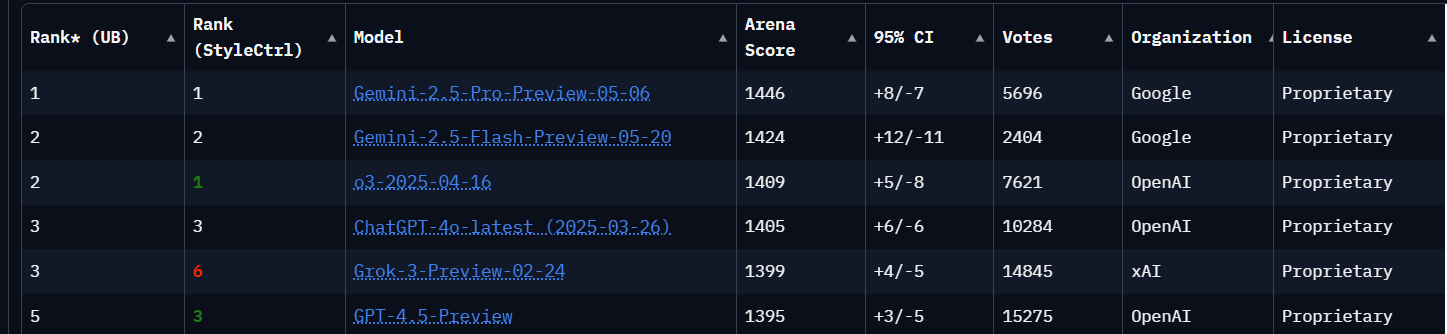

At the heart of this surge in capability is the rapid advancement of the Gemini models. We heard about Gemini 2.5 Pro sweeping leaderboards and setting new benchmarks in coding, as well as the highly efficient 2.5 Flash model.

Powering this is the new TPU called Ironwood which delivers 42.5 exaflops of compute per pod (9,216 chips per pod) ... IKR... designed for thinking and inference at scale, promising a 10x performance increase over the previous generation of TPU chips. This foundation is crucial, enabling faster models at more effective price points. But the real magic happens when this intelligence is combined with specialised tools.
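As a quick sanity check on those numbers, here's a back-of-the-envelope calculation in Python. It only uses the two figures quoted above (42.5 exaflops and 9,216 chips per pod). The variable and function names are mine, and the per-chip result is a simple even split, not an official spec.

```python
# Back-of-the-envelope: per-chip compute implied by the pod figures quoted above.
POD_EXAFLOPS = 42.5      # compute per pod, as quoted in the keynote
CHIPS_PER_POD = 9_216    # chips per pod, as quoted in the keynote


def per_chip_petaflops(pod_exaflops: float, chips: int) -> float:
    """Divide pod compute evenly across chips (1 exaflop = 1,000 petaflops)."""
    return pod_exaflops * 1_000 / chips


if __name__ == "__main__":
    value = per_chip_petaflops(POD_EXAFLOPS, CHIPS_PER_POD)
    print(f"{value:.2f} petaflops per chip")  # prints "4.61 petaflops per chip"
```

So each chip is doing roughly 4.6 petaflops of the heavy lifting, assuming the pod total is spread evenly.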

Unleashing Creative Power: Music, Images, and Video

Imagine you're a one-person creative studio, racing against the clock yet bursting with ideas.

You start by strumming a few chords into Lyria RealTime and within seconds, it's improvising harmonies, adding professional-grade vocals and ambient textures that feel tailor-made for your vision. Excited by that soundscape, you pivot to visuals... a quick prompt in Imagen 4 conjures a moody, high-resolution album cover, complete with nuanced lighting and crisp typography that would have taken hours to design by hand. Finally, you drop your storyboard into Flow, where under the hood Veo 3 weaves together your audio and images into a 30-second film clip, animating characters with realistic physics and even generating native sound effects and dialogue from your brief.

What once demanded days of editing, layering and post-production now unfolds in under an hour... proof that when Gemini's multimodal might meets specialised tools, the creative process becomes a seamless, end-to-end conversation between you and your AI collaborator.

Perfect for YouTube intro / outro videos or even streaming cutscenes 😄

Intelligence, Agents, and Personalisation Across Products

Imagine you're kicking off a new microservice and need to go from idea to running in production... all before lunch.

You fire off a prompt in AI Mode: "Generate a Terraform script for a secure AKS cluster with auto-scaling and logging enabled." Within seconds, Gemini fans out to your private GitHub repos and HashiCorp docs, then returns a tested, style-compliant Terraform file ready to apply. No more digging through boilerplate or wrestling with HCL syntax... your cluster spins up while you draft the README.

Next, you hit up Agent Mode in the Gemini app: "Deploy that cluster, roll out the Docker image from our CI pipeline, run the integration tests and alert me if any fail." In a single exchange, your AI agent executes kubectl commands, monitors test logs for errors and opens tickets in Jira if something goes sideways... all without you switching windows.
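To make the shape of that request a bit more concrete, here's a rough sketch in plain Python of "run these steps in order, stop and alert me if one fails". To be clear, this is not Gemini's Agent Mode or any real API: `run_pipeline`, the step names and the `alert` callback are all made up for illustration, and the steps are stand-ins for whatever would really call kubectl, your CI system or Jira.

```python
from typing import Callable, List, Tuple

# A step is a (name, action) pair; the action returns True on success.
Step = Tuple[str, Callable[[], bool]]


def run_pipeline(steps: List[Step], alert: Callable[[str], None]) -> List[str]:
    """Run steps in order; stop at the first failure and send an alert."""
    completed: List[str] = []
    for name, action in steps:
        try:
            ok = action()
        except Exception as exc:  # a crashing step counts as a failure
            alert(f"{name} raised {exc!r}")
            break
        if not ok:
            alert(f"{name} failed")
            break
        completed.append(name)
    return completed


if __name__ == "__main__":
    # Hypothetical stand-in steps; real ones would shell out to actual tooling.
    steps = [
        ("deploy cluster", lambda: True),
        ("roll out image", lambda: True),
        ("integration tests", lambda: False),  # simulate a failing test run
    ]
    done = run_pipeline(steps, alert=print)
    print("completed:", done)
```

The interesting design choice is the early `break`: a human (or an agent) shouldn't carry on rolling things out once a step has gone sideways.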

But it still feels personal. Because Gemini has your back: by tapping into your project's Style Guide in Drive and your past pull-request comments in Gmail, every code snippet and commit message aligns with your team's conventions. Your change logs read like your own voice, and your CI summaries echo the context you'd normally write yourself.

Finally, you strap on Android XR glasses in the server room. As you peer at the rack, live overlays show pod statuses, CPU heat maps and network traces across your topology... all colour-coded and annotated in real time. Tap a virtual console window to tail logs or trigger rollbacks with a voice command, all while pacing between the racks.

It's seamless, end to end... but here's the question... if AI handles infra provisioning, deployment choreography and even on-the-fly debugging, how do we keep our own troubleshooting instincts sharp? When the glasses guide us through network failures, where do we learn to read packet captures ourselves?

Beyond the Keynote: Accelerating Discovery and Impact

The keynote also touched upon how AI is accelerating scientific discovery (AlphaProof, AlphaFold 3) and making real-world impact in areas like wildfire detection (FireSat), disaster relief (Wing drones), and accessibility (Project Astra with Aira). These highlight the broader vision of using AI for good.

The key takeaway is clear: by combining the intelligence of Gemini with a growing suite of tools and capabilities... multimodal understanding, agentic actions, personalisation, and integration into platforms like Android XR... Google is creating an AI ecosystem that can not only answer questions but actively help you create, explore, and get things done in new and exciting ways.

Many of these "mind-blowing" capabilities are rolling out very soon, marking a significant step in the evolution of AI assistance and creativity tools. The era of AI not just understanding the world, but helping us reshape it and interact with it more naturally, seems truly upon us. Get ready.

The Convenience - Capability Trade-off

Now for the controversial bit... while all of this excites me greatly, especially when you bring these tools and capabilities together like they did with Project Astra, or tease me with a cool new piece of tech like the Android XR glasses... there's something niggling at me...

If these tools and others like them can take on all of our research, problem solving and searching, then what's left? As kids and young professionals we learnt through trial and error, breaking many, many things and then fixing them, and that's how we learnt how things worked. The Astra demo is a great example of how those skills aren't necessarily required moving forward; following along to a video and being willing to give things a try is enough... and if it breaks, then AI will fix it for you. I suppose you could argue it's more efficient to know exactly what needs to be done and exactly which nuts are required, and even to let someone else place orders on your behalf (yeah, it was very cool tbh), but these are removing skills from us as professionals, aren't they?

All this power comes with potential pitfalls. If AI can…

- manage my orders, screen and take calls, summarise conversations and even negotiate routine requests, where do I hone interpersonal skills?

- conduct research, generate agendas, schedule meetings and send invites across timezones, where do I develop planning, organisation and diplomatic communication skills?

- draft and refine presentations, analyse data and visualise insights, where do I learn to interpret findings and craft a compelling narrative?

- plan travel, book accommodation and handle expense reports, where do I build logistics savvy, budgeting know-how and on-the-fly problem-solving?

- tutor students in coding, language learning and exam prep, where do learners cultivate resilience, creativity and independent thinking?

I'm a huge fan of AI and use it in some shape or form at least a few times per day, but I can't help thinking that I'm being diminished as a result of relying on it for anything serious...

Looking ahead

If we take a lot of the skills we know now and infuse them with AI, we get something like:

- Computational Creativity (creativity) - ideation becomes a dialogue between person and model, where prompt-crafting and iterative critique sharpen originality

- AI-Augmented Orchestration (planning and prioritisation) - orchestrating projects across people, AI agents and data pipelines, setting objectives that balance human values with machine efficiency

- Ethical Digital Judgment (decision making and negotiations) - making principled decisions amid black-box outputs, e.g. spotting bias in generative results, negotiating privacy trade-offs and taking responsibility for AI-driven actions

- Multimodal Emotional Fluency (emotional awareness) - reading and responding to nuanced signals across text, audio, video and XR, e.g. guiding AI to convey tone appropriately and interpreting AI-augmented feedback in social contexts

- Adaptive Meta-Learning (personal and professional learning) - constantly updating one's own mental models as tools evolve, reflexively questioning both human and machine assumptions

- Interdisciplinary Synthesis (cross domain knowledge) - using AI to surface connections between, say, biology and economics, then crafting coherent narratives that bridge both

- Resilience in Hybrid Workflows - maintaining focus and agency when tasks shift between analogue and digital, e.g. knowing when to let AI take the lead and when to lean in manually

By 2035, "creativity" won't just be painting or writing, it will be the ability to spark, steer and refine AI-generated ideas. "Planning" won't stop at drawing Gantt charts ... it will involve setting up autonomous agents with guardrails and fallback protocols. And "communication" will span prompting, labelling and calibrating multimodal outputs as much as face-to-face dialogue.

None of these can be taught by lectures alone. They emerge through hands-on, project-based experiences where students:

- coach an AI collaborator to develop a prototype, then reflect on its biases

- run live simulations that blend VR scenarios with real-world problem solving

- debate and defend algorithmic decisions in mock policy boards

In other words, soft skills in an AI-first world will be active, iterative practices... more like sports drills than essays... ensuring learners remain masters of the question and maybe even the answer.

All that being said, Google I/O 2025 showed us that it's certainly an exciting time for the world of tech, and I can't wait to be part of it... no matter what happens 😎